Distributed Lens Architecture

17 min read

There is a problem that is common to databases , user interface , programming languages and pretty much everything that involves state in more than 1 location. It's called the "view update problem". Or in a more general sense: (Bidirectional) Model Transformation . In the functional programming world, this is modelled via a concept called Lenses. It turns out that this elegant idea, is a great way to model distributed system architectures and the pattern appears in many contexts.

Simply put, bidirectional transformation occurs whenever you want consistency between tranformations of state in one location to transformations of state in another location. In this situation, location doesn't just mean geographical location, but abstract location in terms of form and style.

For example, we might have a dictionary of some items (our pseudolanguage uses

== as a comparison operator):

items = {

a = 'apple',

b = 'banana',

c = 'cucumber'

}

This becomes our first location of state. We then extract our second location of

state which is just the item a:

itemA = items[a];

We execute a transformation on the itemA:

itemA = 'apricot';

And here is our intention: we want both of our "state-locations" to be simultaneously transformed:

// second location has been transformed

itemsA == 'apricot'

// causing our original first location to be transformed

items == {

a = 'apricot',

b = 'banana',

c = 'cucumber'

}

The question is, how do we do that? The answer is of course lenses.

Lenses

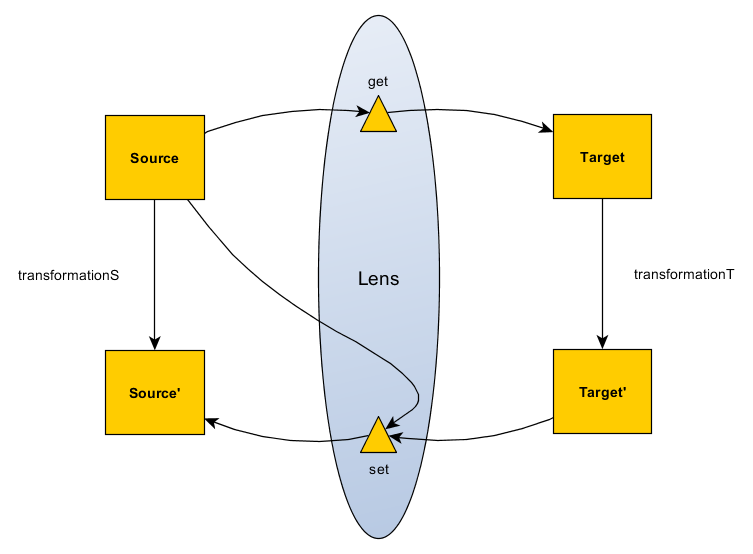

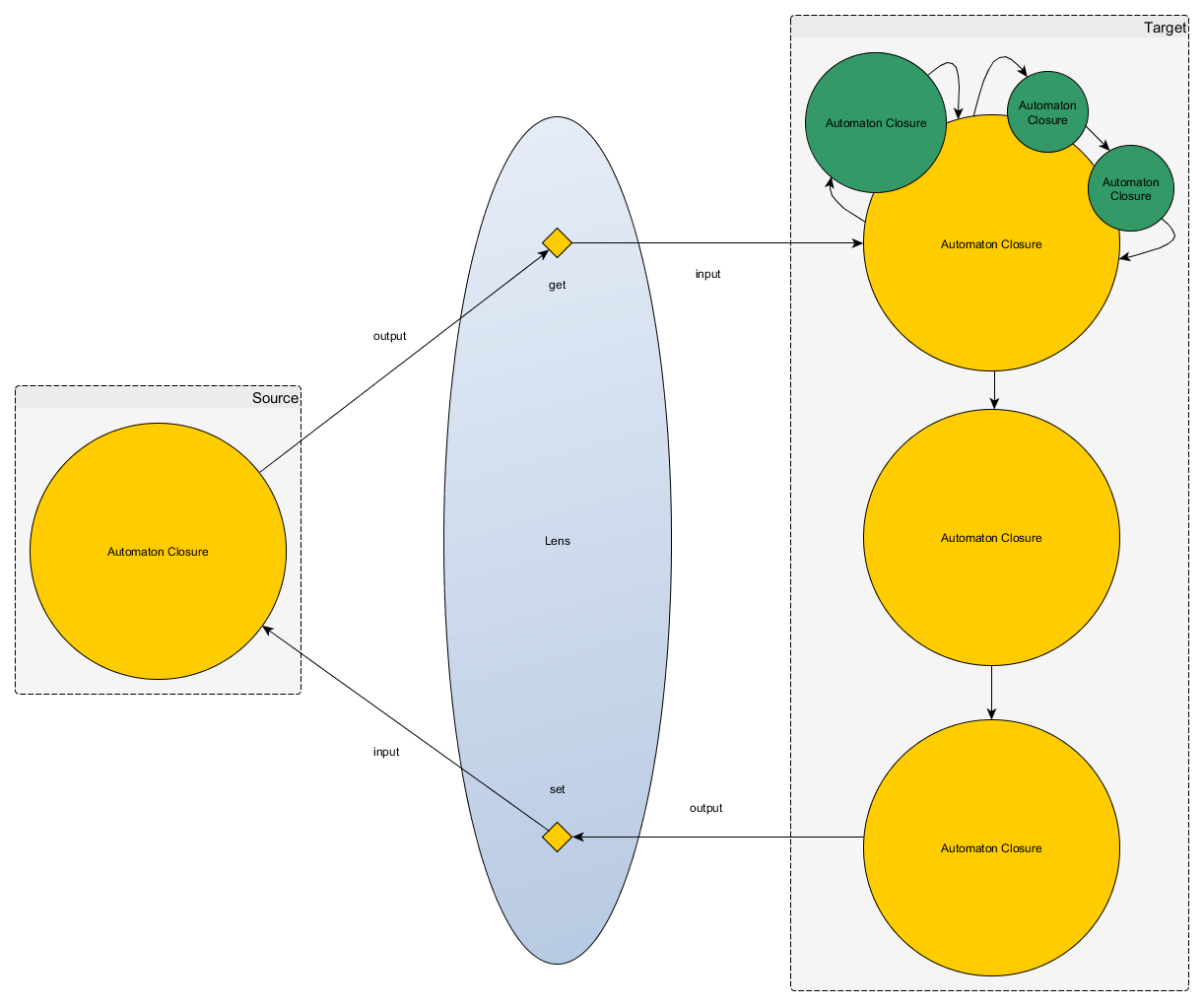

The definition of a Lens basically describes this bidirectional transformation

concept via 2 primitives, a get and a set. We have 2 state locations, the

first is called Source, the second Target. Target' is the new updated

target after a transformation, and the Source' is the new updated source after

transformation. The source transformation only occurs after the target gets

transformed.

The set function takes a product of Source and Target'

(set :: T x S -> S). This because implementation wise, once you have the

updated target, a pure set function would need to merge both the original source

and new target in order to get the new source.

The name Lens comes from Benjamin Pierce's work on bidirectional transformations . The link gives a better, more detailed and rigorous explanation of lenses.

Lenses are usually implemented as domain specific languages. The ideal lens

syntax is bidirectional, that is a left to right reading denotes a get

function, and a right to left reading denotes a set function. You may notice

that this sounds similar to unification in logic languages (which is an

interesting segue into Architect's logic language capabilities).

The purpose of this post is to show how the ideas in Lens theory and Bidirectional Transformations are useful when it comes to modeling Matrix's Functional Reactive Infrastructure and thus establishes some perspective regarding the primitives that will be available inside the Forge Architect language. The below will use a bit of Haskell because this is where we got the Lens idea from, but you don't really have to understand Haskell, just the meaning.

Simon Peyton Jones, one of the main contributors to Haskell gave a talk introducing a lens library written by Edward Knett: https://skillsmatter.com/skillscasts/4251-lenses-compositional-data-access-and-manipulation

If you were to go about implementing Lens functionality, your first idea might be just a record type that has 2 functions inside of it:

data NaiveLens source target = NaiveLens {

get :: source -> target,

set :: target -> source -> source

}

Basically in this case a Lens is just a pair of functions. But this

implementation isn't very efficient because it is not

sufficiently abstract

. If you want to have functions that do say an in-place update, you would have

to get the data then set the data, which both require some pattern matching

runtime cost. You can resolve this by adding an over function to the record,

but what happens if you need to do an in-place update to a Maybe or an IO

value? These would also require its own function inside the record, thus now

making the record rather bulky.

Fortunately there is a way to abstract above this implementation into something

more generalised that takes care of all situations. What if instead the Lens was

just a

type of a single function?

-- this is a van Laarhoven lens

-- any function that has this type signature is a lens!

-- in Haskell the `->` is just a function constructor, and all parameters are curried.

type Lens source target = Functor f => (target -> f target) -> source -> f source

This single type signature covers all the uses of Lens including get, set,

over and over with various higher order types Maybe, IO.. etc. Please

note that this is just a type, it's not the actual function implementation. You

would write a function that matches the above type signature for each lens you

want. Once you have a lens function, your get and set functions would have these

type signatures (read the -- comments if you're not familiar with Haskell):

get :: Lens source target -> source -> target

get lens source = getConst . lens Const

set :: Lens source target -> target -> source -> source

set lens target = runIdentity . lens (Identity . const target)

-- if you're not familiar with Haskell, the above 2 type signatures basically mean:

-- get (lens, old_source) => old_target

-- set (lens, new_target, old_source) => new_source

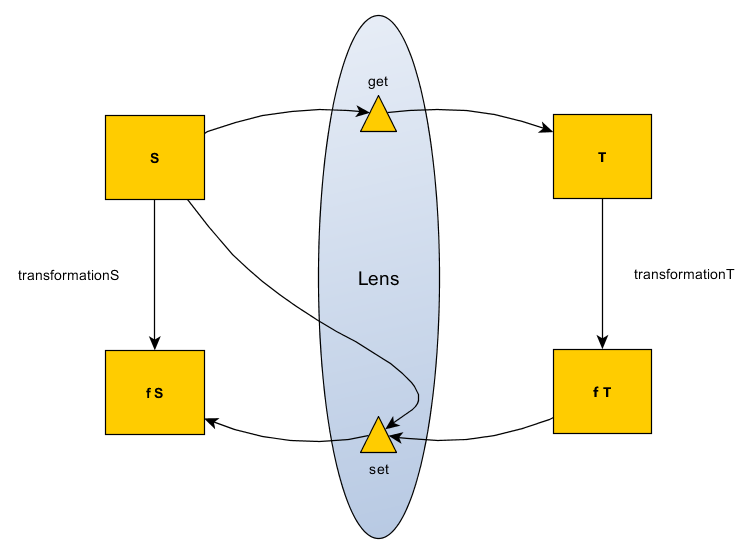

Now comes the intuition. This part isn't really rigorous, it's just how I've

chosen to interpret this Lens type. This types looks like it's specifying a

relationship between a target transformations and source transformations. A

functor is basically anything that implements a "structure

preserving map function". The basic example of a functor is a list. Where when

given a list of a [a] and a function from a to b a -> b, then you can map

fmap that function to operate on a list of a to a list of b [a] -> [b].

There are functors than aren't lists, and their corresponding mapping function

results in different kind of

structure preserving transformations.

To learn more about these concepts, check out

Functors, Applicatives, And Monads In Pictures

.

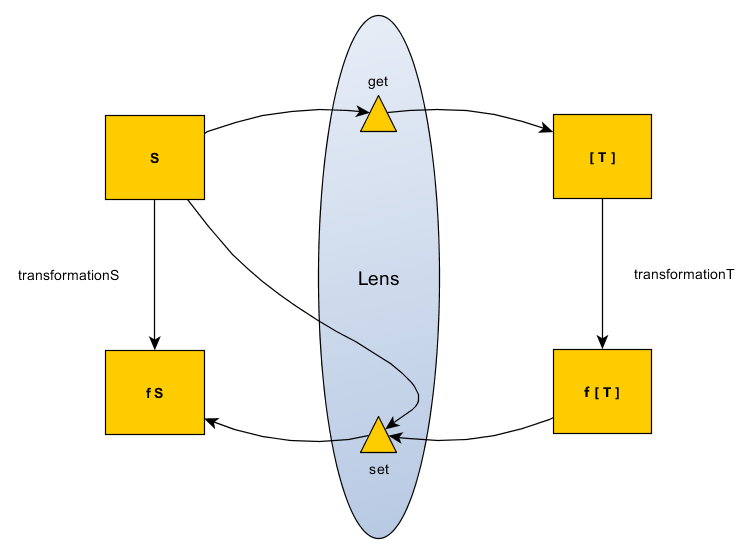

The (target -> f target) -> source -> f source could be interpreted as making

both the target and source into functors which are then "mappable". Any function

implementing this lens type is a function that accepts a function that makes the

target mapped, and returns a function making the source mapped. These mappings

are equivalent to the transformations happening on the lenses.

We can change the meaning of the lens by changing the particular functor that we

use. In fact if we use an Const functor, we can get the get functionality,

and if we use the Identity functor we get the set functionality. This

tutorial goes into the gory details of the Haskell implementation:

http://blog.jakubarnold.cz/2014/07/14/lens-tutorial-introduction-part-1.html

This also gives us another cool ability, instead of just having one focus, we could have foci. We could have lens that focuses on multiple targets based on some condition. Transforming this list of targets still results in a transformation at the source.

We should be able to understand that the get and set are just implementation details. They are not the purpose of lens. I think this intuition captures the real semantic of lens which is a form of mirroring: a transformation on the target has an equivalent transformation on the source. Remember there's nothing stopping us from flipping the lens and changing perspective so the target becomes the source and source becomes the target.

For subsequent diagrams we're going to assume that the set function implicitly

takes the original source and recombines to a new source state. This means we

won't explicitly draw the the arrow S -> set.

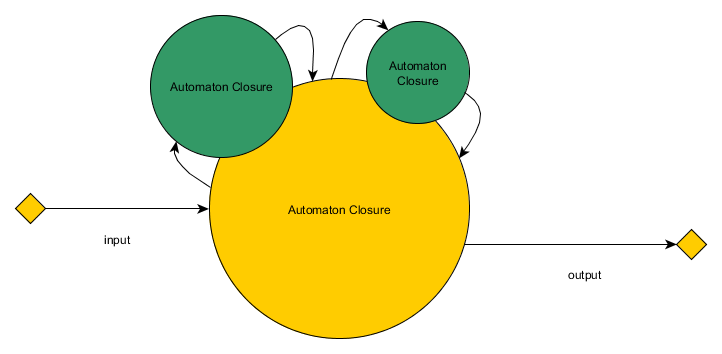

Automata Theory

Before we can understand why lenses matter in a distributed context, we need

another insight. It's the fact that the target and source data structures are

just automatons in

Automata Theory. The get

function from the perspective of the target is equivalent to the input alphabet,

the transformationT is equivalent to a transition function, and the set is

just a matter of returning some value from the final state. In Automata Theory

there can be a plurality of transition functions. There are

many types of automatons,

but here's a diagram of a finite state machine automaton:

The observation we're making here is that both the two sides of the lens (source and target) are automatons and the entire lens itself including the source and target is also an automaton. For reasons that will be clearer as you read, this means that the composition of automatons is itself an automaton.

This shows us that lenses simply model the communication protocol between 2 automatons (but also represent the transition functions of the overall automaton). Furthermore, all computer programs are some form of automaton.

Architecture

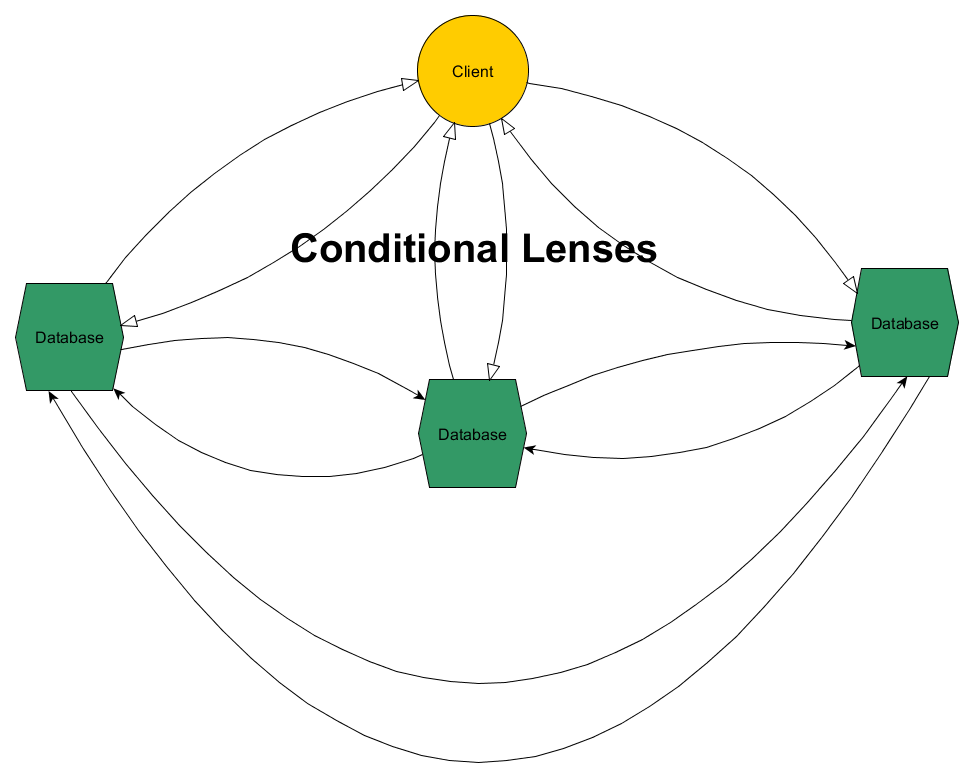

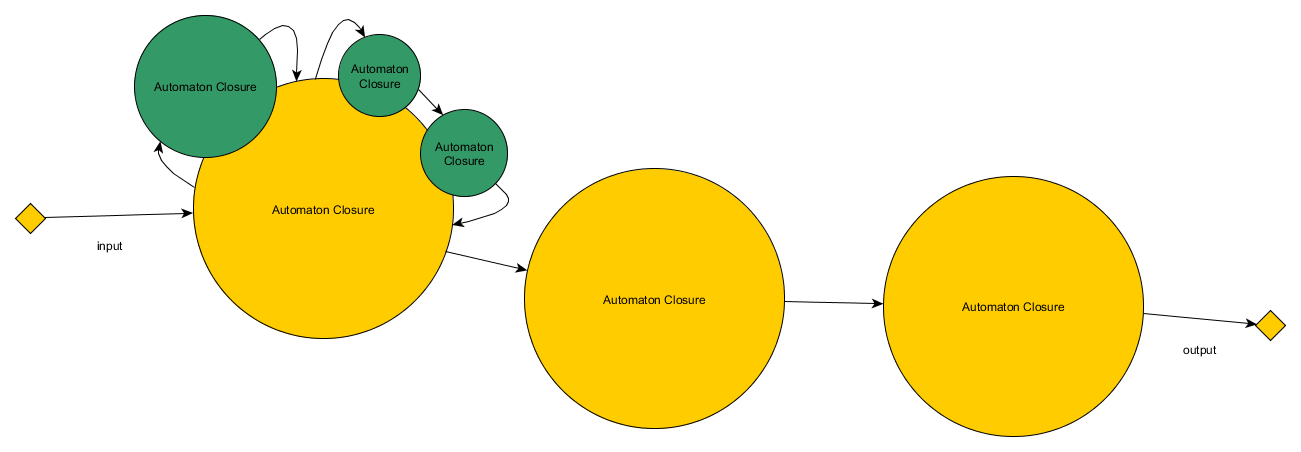

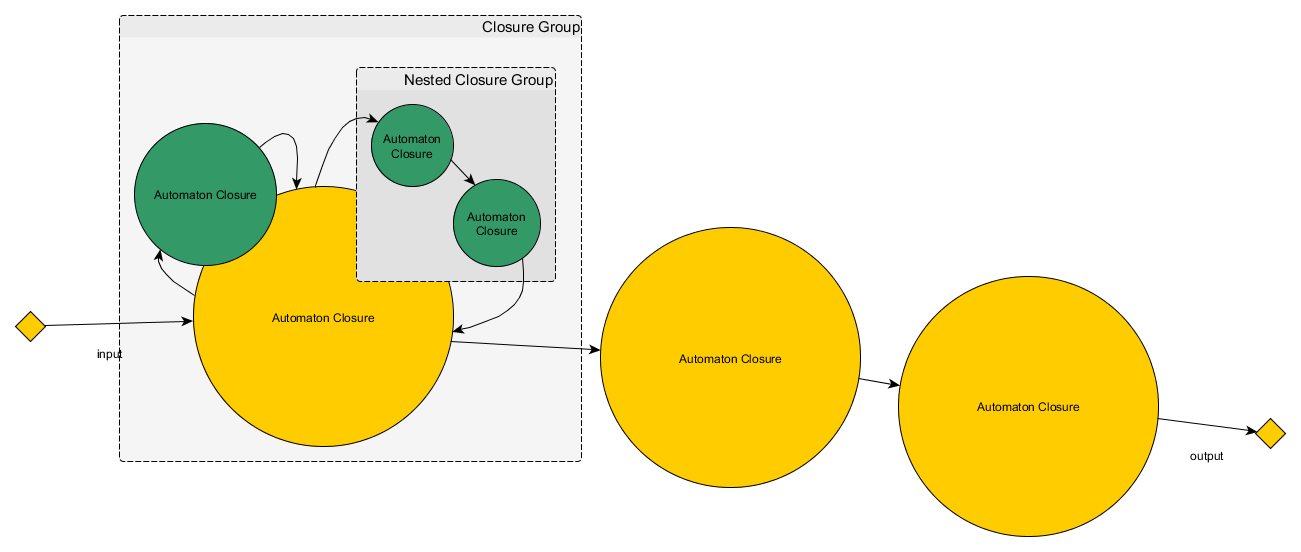

We've identified 3 types of lens patterns that exist in distributed systems. These diagrams do not show transformation/transition functions, that is assumed to be implicit.

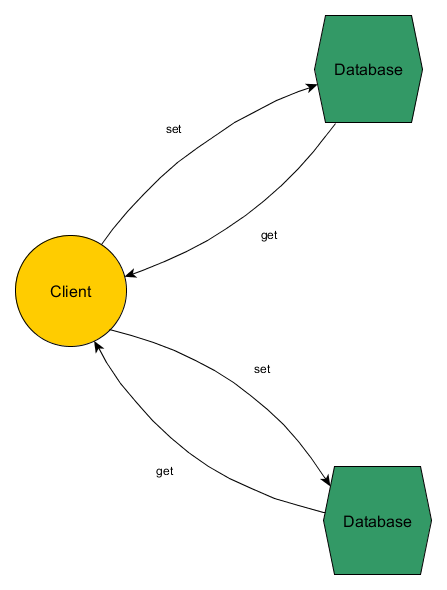

Tree Independent Lenses

This style of lensing commonly appears in web mashup applications and is probably the first iteration of a distributed messaging system. Applications that essentially do RPC or RESTful API calls on remote resources. This is most simplest to implement, but has some major disadvantages. The big problem with this is ensuring heterogenous consistency. Usually when a single client is calling multiple heterogenous databases, these databases have incompatible transactional interfaces. This means even if each independent lens operation is an atomic operation, the composition of these atomic operations is not atomic. You can only make it atomic if you set up co-ordination protocols such as paxos, or phased commit, or cross-database transactions, or some sort of retry with idempotent messages. LinkedIn calls this "Application-Driven Dual Writes".

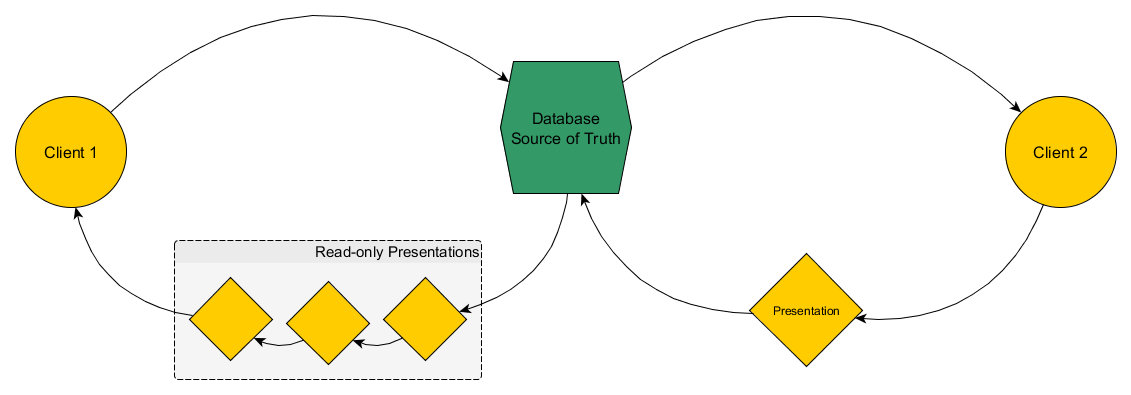

Loop Threaded Lenses

We can solve this heterogenous consistency problem by using a single source of truth that is subscribed by various other read-only databases that are used as "presentations" of the truth. Clients write directly to the truth, but read from the presentations. LinkedIn uses this in their log-centric infrastructure that is powered by Kafka:

I call this a loop thread, because the lens get & set loop, threads through different database presentations. The different database presentations provide different styles of viewing and manipulating the data. It resolves the heterogenous consistency problem since we only have one type of data storage that we need to keep consistent, all other databases simply subscribe to changes. They act as pipes that is part of the lens. Note however that from the client's perspective, there's no way to tell if the returned output has been piped through a series of filters or not. The client does not need to be aware of this.

Keep in mind that lenses have to be bidirectional, there cannot be a uni-directional lense. Since the single source of truth does not accept writes from the subscribed databases, then this is a unidirectional relationship. Any unidirectional relationships has to be part of a larger more abstract lens loop. This means if you have an automaton sending data to another automaton but not receiving any data back, this is not a form of lens composition, but a kind of function composition.

Another key insight is that there can be multiple clients in this system, and

each client may have different get functions. The different get functions

might be routed through a conditional lens

(see the page 117).

As you can see in the LinkedIn's diagram, all the different subscribers denote a

different get function.

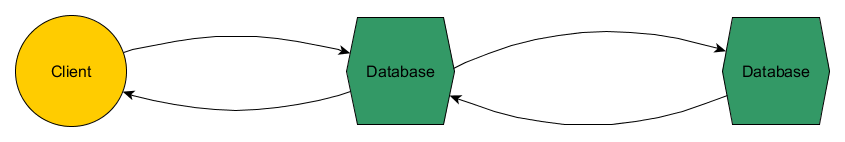

Chain Link Lenses

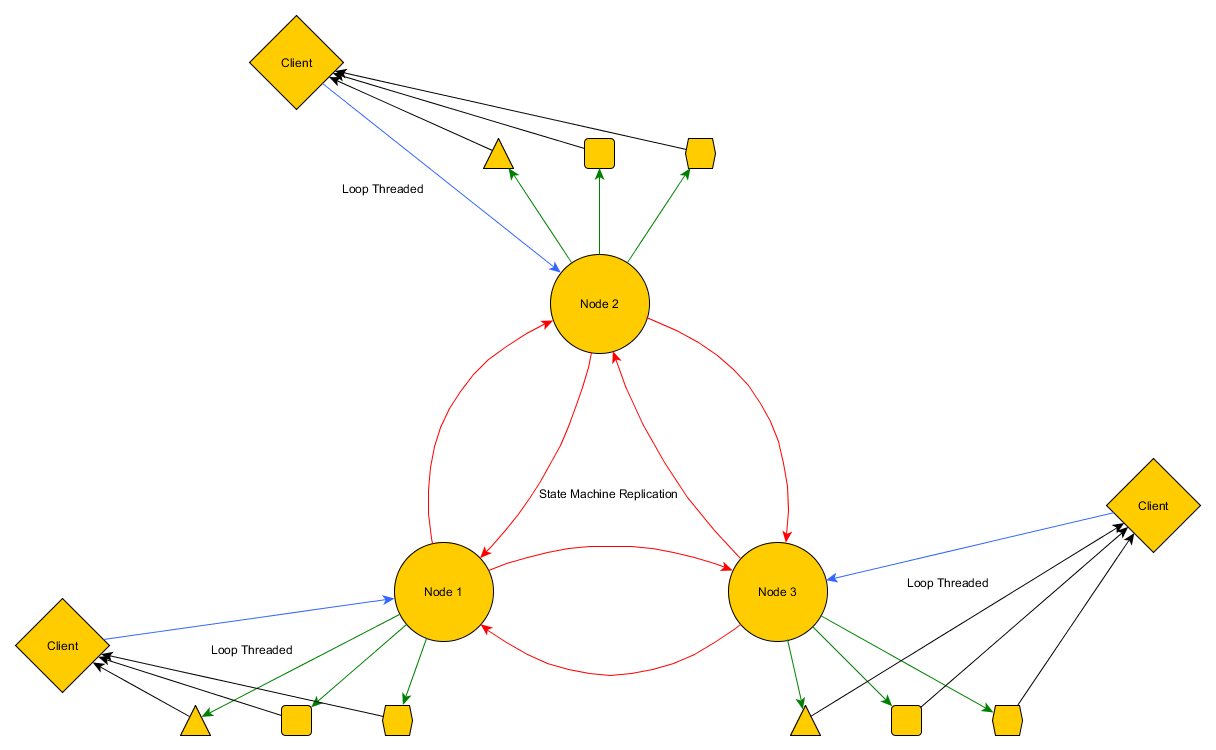

This is just lens composition applied to the distributed context. You will probably see this style of architecture often in backup systems. This is equivalent to state machine replication on an Active/Passive configuration. If we allow the client to have multiple conditional lenses to all the databases, what we end up with is a state machine replication on an Active/Active configuration. This style works great for homogenous consistency, where all the data store nodes have the same kind of interface.

A key insight at this point is to realise all 3 lens patterns are related. For example, the tree independent lens is the basic pattern that exists in all patterns, and in the Loop Threaded Lens, the single source of truth can in fact be a plurality of replicated homogenous nodes that looks like a Chain Link Lenses pattern.

Automatons

We find that "Automatons" is a suitable name for the fundamental units of computation deployed in the Matrix network. They could also be called functional closures, or black box actors with a defined interface, or a microservice, or a docker container depending on your perspective.

In the previous section on Automata Theory, I mentioned how lenses model the communication protocol between automata. One of the goals of the Forge Architect language is to reify infrastructural units (which we call Automatons) as types that can be reasoned about in a type safe manner. Lenses gives us a model to understand how the communication between automatons is a form of composition.

Composition is the key to modularity and the management of complexity. It allows us to build complex abstract things from simple reified things. Before we can continue, we need to specify that there is in fact two kinds of composition:

Automatons were first inspired by the actors inside Erlang's actor model and Object Oriented Programming's Message Passing semantics, and of course Automata theory. There's often a complaint about how actors don't compose. This is because actors generally hardcode the receivers of the messages they send to. But this isn't necessarily true. In the OOP world, there's a concept called dependency injection, where you can inject dependencies into your objects. This is equivalent to parameteric type construction or object composition. There's nothing stopping the actor model from allowing the actor dependencies to be dynamically injected, it is up to the implementation to support such an ability. Therefore dependency injection addresses dynamic object composition. Without dependency injection, object composition happens statically, or the composition is hardcoded, which makes the topology inflexible.

We still need to address functional composition. This refers to the ability to pipe the output of one function to the input of another function. Automatons can also be functionally composed, but first we need to shift our perspective and look at Automatons as if they were functional closures. This allows us to have a model that integrates object composition with function composition.

Under this model, the construction of an automaton is through a functional closure. The closure's parameters are the automaton's dependencies. The dependencies hence exist inside the referencing environment of the automaton/function. Any automatons that are within a closure, are equivalent to a nested lens between the automaton closure, and the automaton that was injected into the closure. Functionally composed automatons are not a full lens, but are part of a bigger lens.

At this point the actor model no longer applies. Instead we need to take ideas from the functional reactive programming (FRP) world. Imagine we had a number of these Automaton closures. In order to be able to functionally compose, we need to have network combinators that can explicitly redirect the input and output of each Automaton. These network combinators are derived from arrowised FRP. They are like functions/types that reify the logic in your dataflow. We could implement lens semantics via network combinators. ( From here you could add logic combinators and constraint optimisation, what you get is a dynamic type-checked distributed topology that optimises itself based on your constraints.)

To reiterate, each automaton is thus a closure that can have its input and output piped into other closures, thus forming a loop threaded lens, or they can have their dependencies injected into them (passed as parameters into the function closure), forming a dependency tree of independent lenses. When automatons are functionally composed, they embody half of the lens mirror. When automatons are objectly composed, they embody a complete lens that's nested inside the Automaton closure. The meaning behind the lens is simply that a state transformation on the target should result in an equivalent state transformation on the source, and that is all a client-server relationship amounts to.

These are the primitives that you can use to build up a Matrix functional reactive infrastructure network, layering lenses upon lenses, automatons closures upon automaton closures, until you get the final lens, where the get/set/input/output are the external API points that your customer's browser connects to.

Things you can now do that you couldn't before:

- If lenses can be reified via network combinators, they give you a type safe topology, where the Architect compiler will let you know if any infrastructure changes result in type errors. This requires reliance on the type interfaces to be written correctly, this is one of the tradeoffs we have to make if we want to support blackbox contained programs written in any language, whereas if it was all written in one language, you can have deep introspection on each program. It might be difficult without very detailed interface specifications of each Automaton. Network combinators can deal with queuing, load balancing, merging, delay.. etc.

- Network combinators are subject to optimisation via logic programming. The logical relationships can be highly abstract primitives that are actually implemented with machine learning techniques underneath.

- The functional reactive infrastructure, ignoring time and space leaks could allow you to actuall rollback the side effects of a running distributed infrastructure.

- Lenses don't specify whether the communication should be asynchronous or synchronous. This is up to the individual automata to decide. I believe that this makes lenses more generalised.

- So what's the best way to deal with consistency when using lots of different systems. I suggest the log centric infrastructure championed by LinkedIn. This becomes easier to implement when using Forge Architect. In the below diagram, the single source of truth is a set of homogenous nodes in a chain link lens configuration. Clients connect to the most optimal (possibly geographic location) node. The other shapes represent other database presentations that is functionally composed:

In summary, the Architect language allows you the define automatons as reified types which represent units of distributed closures, inject automaton dependencies into them, and wire them up using network combinators. The key thing is that the actual implementation of your automaton can be written in any language, it just needs to be put into a container, and have a written type interface attached to it. All of this done in a pure and declarative manner, giving a user immediate clarity over the purpose of the Matrix infrastructure, with a type system that gives you compile time safety when you make changes to the infrastructure, and a platform that can be subjected to machine learned infrastructural optimisation.

As a configuration management language, the Architect language focuses on configuring the automatons and the network that connects them. Ideally any machine level configuration should be abstracted and optimised based on user specified constraints by the Architect compiler.